Use post-flagging tools to teach basic media literacy to the masses

A modest fake news proposal for Facebook. We should consider whether platforms like Facebook have an obligation to train their users to use those platforms effectively.

Facebook appears to be rolling out the features it announced last year that label fake news as “disputed” and allow users to join established fact-checking organizations in reporting false news. This is a much-needed first step in the fight against news designed to deliberately mislead audiences on social media. But Facebook could have a deeper, more lasting impact if it used this new flagging feature to teach basic verification skills to its users.[1] Facebook already has the tools to implement a basic media literacy screener. It just has to extend them to its fake news flagging.

Facebook’s Existing Flagging Features

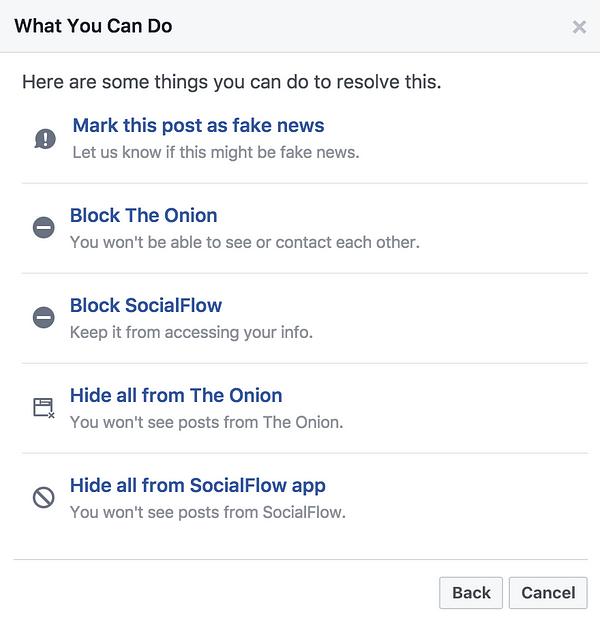

Here’s how it could work. Right now, when you report fake news, Facebook gives you only two action items: mark the post as fake news or block the page entirely.

Yes, I deliberately chose to use a post from a satire site so as not to appear biased against a particular source.

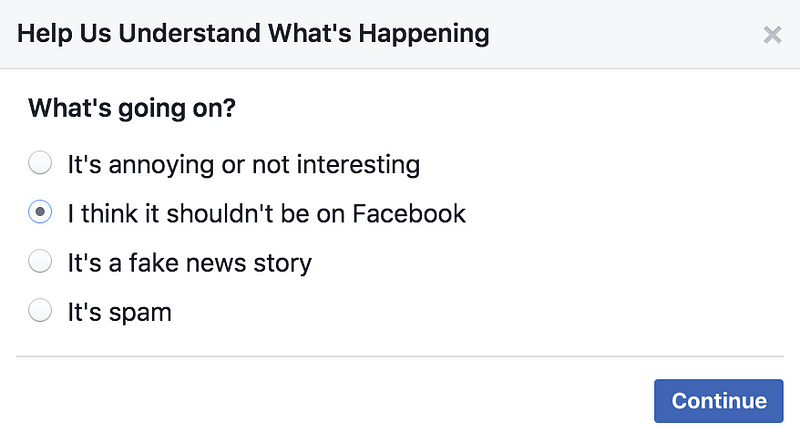

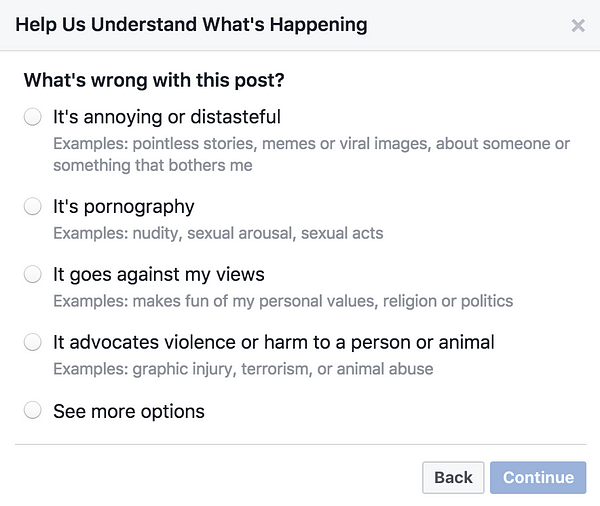

When you report a post for any other reason, however, you’re asked to help Facebook understand why you’re reporting the post.

In fact, you can’t report the post without selecting an option. If you select “See more options” and then “It’s something else,” Facebook doesn’t let you file a report at all; it only lets you block the source.

The Media Literacy Screener

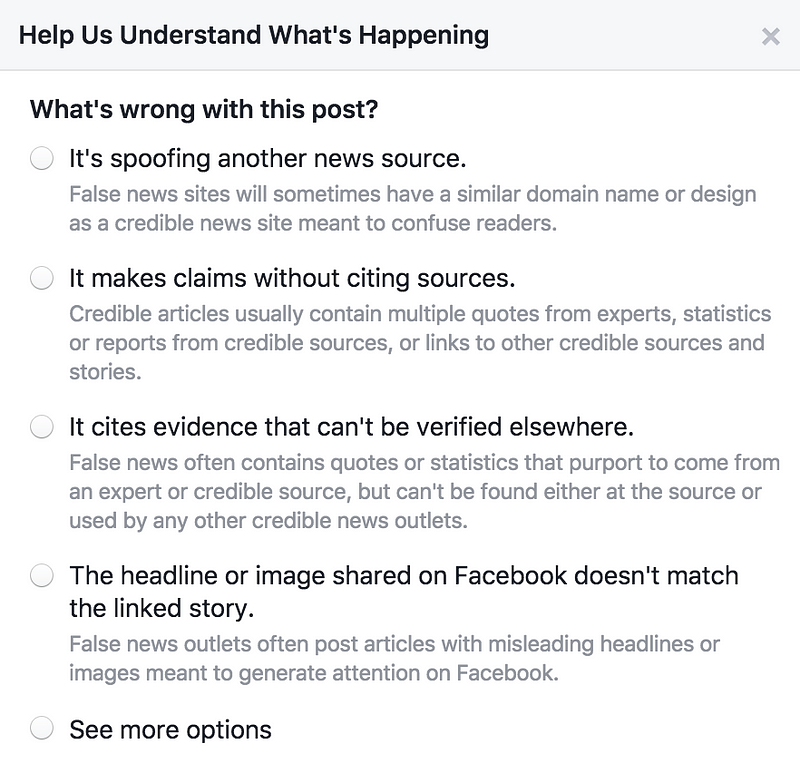

Imagine if Facebook employed a similar screener for fake news reports. Instead of flagging something in one click, you would be required to choose from a list of common fact-checking red flags. Here’s a mockup I made by cribbing from this guide to self fact-checking.

The theory behind this is simple, and similar to a Norwegian site’s new approach to fighting comment trolls (thanks to Heidi Moore for the comparison). I suspect many people, from across the political spectrum, will flag news that isn’t fake at all but simply doesn’t conform to their beliefs. As a result, Facebook will likely have to rely heavily on the professional fact-checking organizations it’s working with and/or build some kind of user reputation score for fact-checking to weigh fake news reports. In addition to those techniques, it should use fake news flagging as an opportunity to teach users basic media literacy and get better flagging data to boot, potentially without triggering confirmation bias.[2]

As a teaching tool, it could be effective. The idea first came to me (I’m sure others have had it, too) after reading Eli Pariser’s crowdsourced Design Solutions For Fake News document a few months ago.[3] Lots of people mentioned one underlying cause of misinformation, poor media literacy, and recommended fixing it by adding media literacy education to school curriculums. While I agree that adding media literacy training to formal education is a good approach, that solution could take decades to have an impact. It also doesn’t take into account a growing body of evidence that adults learn a great deal in informal settings throughout their lives, potentially more than they learn at school.

To be clear: I don’t think this is any kind of silver bullet. It’s one idea that seems simple to implement and would add one more tool to the fight against misinformation. Even if you think this particular idea isn’t useful, I’d ask you to consider this: platforms like Facebook have fundamentally changed the way we receive and process information. We should think about what role they should play in helping their users healthfully adjust to the new media dynamics they’ve created.

Notes & Discussions

- Whether and how Facebook should teach media literacy to its users is a discussion worth having, and I’d love to hear your thoughts there. Personally, I think it not only should teach media literacy for the internet age but has an obligation to, given its worldwide impact on how we process news and information.

- Research does seem to offer some evidence for training and nudging as debiasing strategies, but I’m not enough of an expert in the field to point to a specific paper, and articles on the subject are scarce.

- I added the idea to Pariser’s doc at the time, but it seems to have since been either folded into other ideas or removed. If you’re super curious, you can see it on page 86 in this revision.

By Gabriel Stein on March 4, 2017.

Exported from Medium on October 22, 2020.